Your customers look for you online.

If your page ranks well, they’ll find you—hooray. But if Google gives you a crapola search engine ranking score, they won’t.

And if customers can’t find you, they find your competition instead. And when that happens, you figuratively set your hopes and dreams of making money on f**king fire.

A glowing SERP score is about tethering your hopes and dreams to search engine optimization genius.

The truth is, there are over 5 billion searches on Google every day, and the only score that wins page one visibility is an A+.

So if you care about getting found and connecting with your customers in a way that impresses them so much they want to buy from you, step one is to get your face in front of them.

From there, it’s a domino effect.

The good news is that getting the right eyeballs on your stuff doesn’t take intuition, high-level theory, or “knowing a guy.”

It takes methodical SEO marketing precision.

And that’s what we’ll go over here—all the ways to take your rank straight to the bank.

By the end, you’ll know the difference between on-page, off-page, and technical SEO ranking factors. You’ll also learn how to optimize the top eleven ranking signals that matter most, so Google straps a jetpack on your SERP position.


What are search engine ranking factors?

The Google algorithm uses ranking factors to determine if your site has the cojones to fight for a first-page spot on the search engine results page (SERP).

Those cojones have to do with relevancy.

There are hundreds of search engine ranking factors that measure if your page is relevant. If it is, you get the first-class parking spot where future fans see you when they enter the search queries you expect them to.

Start by crossing off the items on your SEO best practices checklist. Once that’s done, move on to the ranking signals that RankBrain puts in the spotlight.

RankBrain is a machine-learning component of Google’s core algorithm that helps it interpret searches and decide which results are most relevant.

The algorithm changes all the time, so the priority of each ranking factor shifts as Google fine-tunes its selection of sites that score high for quality, trustworthiness, and performance.

The good news is you don’t have to excel at every search engine ranking factor. Many of those factors are subitems of bigger signals, so they fall into place naturally when you tackle the bigger item properly.

There are eleven search engine ranking factors that make the biggest impact on your site’s position in SERP.

We covered the first five in our SEO best practices post:

- Keyword optimization (based on search intent)

→ improve your click-through rate (CTR) with targeted keyword research that finds semantic long-tail keywords.

→ write sensational snippets (title tag and meta description), then monitor your search results with Google Search Console

→ avoid keyword stuffing

- High-quality content (create unique, new content & refresh old content)

→ Use hierarchies (H1, H2, H3, etc.) and alt text to add structure to on-page content

→ avoid duplicate content

- User Experience (design your page with white space and keep your message as concise as possible to lower bounce rate, increase dwell time, and boost loyalty)

- Link building (anchor text, internal links, external links, and backlinks)

- Page Speed (the faster the site speed, the better the user experience and your rank)

We cover the other six search engine ranking factors in this post.

Here’s how you point a giant flashing neon sign at your page beyond SEO best practices:

- Domain authority (plus E-A-T: expertise, authority, trustworthiness)

- Secure Sockets Layer (SSL)

- XML sitemap

- Robots.txt

- Schema markup

- Device (mobile-friendliness)

We’ll get to these, but first, let’s look at how Google decides your score.

What determines Google’s search ranking?

Before Google can rank your page against these search engine ranking factors, it has to do two things:

- Crawling

- Indexing

Crawling

Googlebot visits your site and crawls it to index new and updated pages. There are separate mobile and desktop crawlers and, not surprisingly, the mobile crawler is the primary one.

That’s because about 60% of the global population (4.66 billion people) uses the internet, and 92.6% of those users (4.32 billion) go online with a mobile device.

Google’s algorithm decides which of the billions of sites to crawl, and which pages to fetch, using the links it already knows about and the sitemaps site owners submit. Once Googlebot lands on a page, it follows the links there, updating pages it already knows and noting new links and broken ones.

But Google doesn’t crawl some pages:

- Pages blocked with a Disallow rule in robots.txt (these can still be indexed, usually without a description, if another page links to them)

- Private pages that require a login

How to improve crawling

- Submit a sitemap

- Ask Google to crawl your pages when you update them

- Use logical, readable URL page paths and internal links

- Make sure no crawl-ready pages are blocked by a Disallow rule in your robots.txt file
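To make “logical, readable URL page paths” concrete, here’s a minimal Python sketch of one common convention: lowercase, hyphen-separated slugs nested under a section folder. The `slugify` and `page_path` helpers and the `/blog/` section are hypothetical; most CMSs generate slugs for you, each in its own way.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a short, readable URL path segment (hypothetical helper)."""
    # Drop accents so "Café" becomes "Cafe": decompose, then discard non-ASCII marks.
    ascii_text = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    # Remove apostrophes so "What's" becomes "whats" instead of "what-s".
    ascii_text = ascii_text.replace("'", "")
    # Lowercase, then collapse every run of other characters into a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    # Trim any hyphen left over at either end.
    return slug.strip("-")


def page_path(section: str, title: str) -> str:
    """Nest the slug under its section so the path shows where the page lives."""
    return f"/{slugify(section)}/{slugify(title)}/"


print(page_path("Blog", "SEO vs. SEM: What's the Difference?"))
# /blog/seo-vs-sem-whats-the-difference/
```

Short, descriptive paths like this tell both visitors and crawlers what a page is about before they ever open it.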

Indexing

When Googlebot crawls your page, it processes content, tags, attributes (alt text), and schema markup. It flags duplicate content that hasn’t been sorted out with canonical tags (and crawls those duplicates less frequently, with a scowl). It leaves pages with a noindex robots meta tag out of the index, and it doesn’t follow links on pages marked nofollow.

How to improve indexability

- If you have similar pages (different languages, or two slightly different versions for mobile and desktop), tell Google which one is your canonical page (the most authoritative main page).

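- Use heading hierarchies and attributes (like alt text)

- Use robots directives (Disallow in robots.txt, noindex in robots meta tags) to steer Google toward the pages that matter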
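A quick way to audit the canonical step is to check which URL a page declares in its `<link rel="canonical">` tag. Here’s a minimal Python sketch using only the standard library’s `html.parser`; `find_canonical` is a hypothetical helper, and the example.com URLs are placeholders.

```python
from __future__ import annotations

from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, so split before checking.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def find_canonical(html: str) -> str | None:
    """Return the page's canonical URL, or None if it has zero or conflicting tags."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals[0] if len(finder.canonicals) == 1 else None


page = '<head><link rel="canonical" href="https://www.example.com/shoes/"></head>'
print(find_canonical(page))  # https://www.example.com/shoes/
```

Returning None for two or more canonical tags is a deliberate choice in this sketch: conflicting canonicals send Google mixed signals, so they’re worth flagging rather than quietly picking one.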

- Use schema markup

Types of Google ranking factors

As you optimize for search engines, some of that optimization happens right on the page, some of it happens off the page, and some of it happens under the page.

These three categories are called on-page, off-page, and technical SEO. Combine the ranking factors in each of these categories, and you’ll build an SEO-friendly website.

Google likes those.

On-page SEO

On-page ranking factors relate to keywords, the quality of your content, and user experience.

Off-page SEO

Google measures off-page SEO indicators from other pages that point to your page. That happens with backlinks, guest posts with a linked bio signature, webinars where you’re a featured guest with a site credit, etc.

Technical SEO

Technical rank signals measure your site’s performance overall, looking at things like speed, sitemaps, URL structure, and markup.

The top search engine ranking factors

1. Domain Authority (DA)

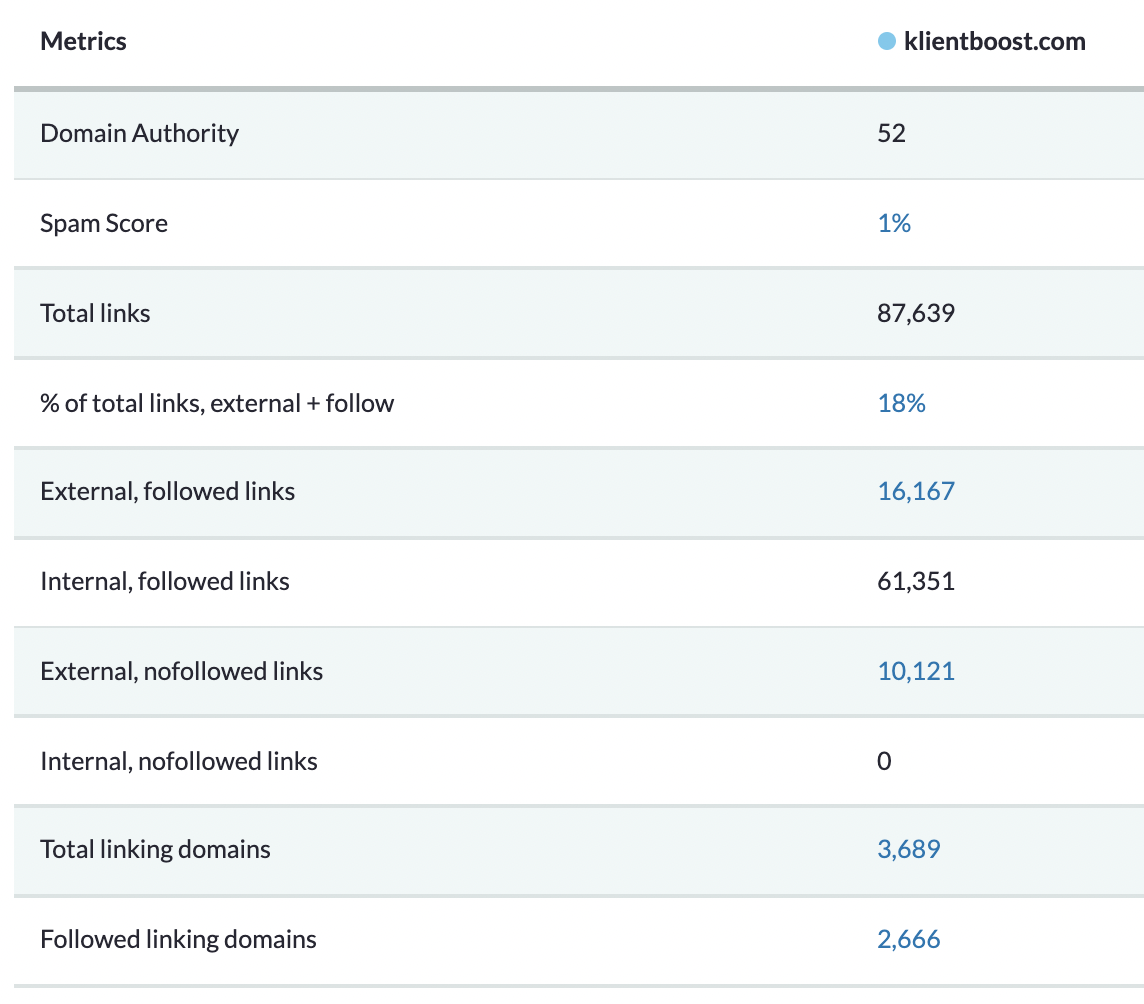

Domain Authority puts a grade between 1 and 100 on the strength of your website, and this grade correlates with SERP position.

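Moz introduced the DA score, which is based mainly on the links pointing to your site. Google doesn’t use DA itself, but because the score tracks the quality of your links, it’s a useful stand-in for the authority Google does measure. Today, it’s a staple rating.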

A low DA score means your site doesn’t have many high-quality links pointing to it, which means it will have a hard time ranking in SERP for competitive keywords.

A high DA score means you have a better shot at outranking competitors whose domains score lower.

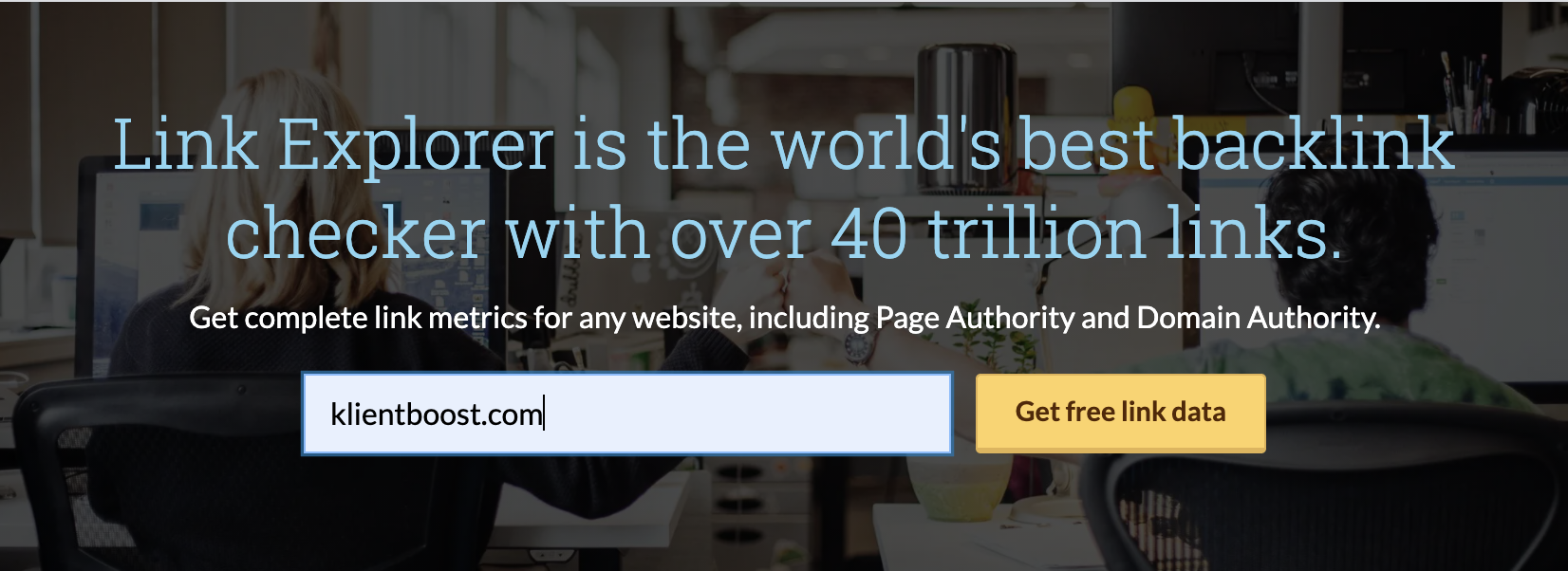

Find your DA score using Moz’s Link Explorer tool.

Enter your URL to see your score and your link health.

What’s a good domain authority?

- Weak DAs are between 1-20

- A DA of 20-30 is okay

- A DA between 30-50 is good

- A strong DA score is somewhere in the 50-60 range

- 60 or higher is an excellent DA

Getting that 60+ score means spending a lot of time link building and getting high-quality links from high DA sites. It’s not as much about quantity as it is about quality.

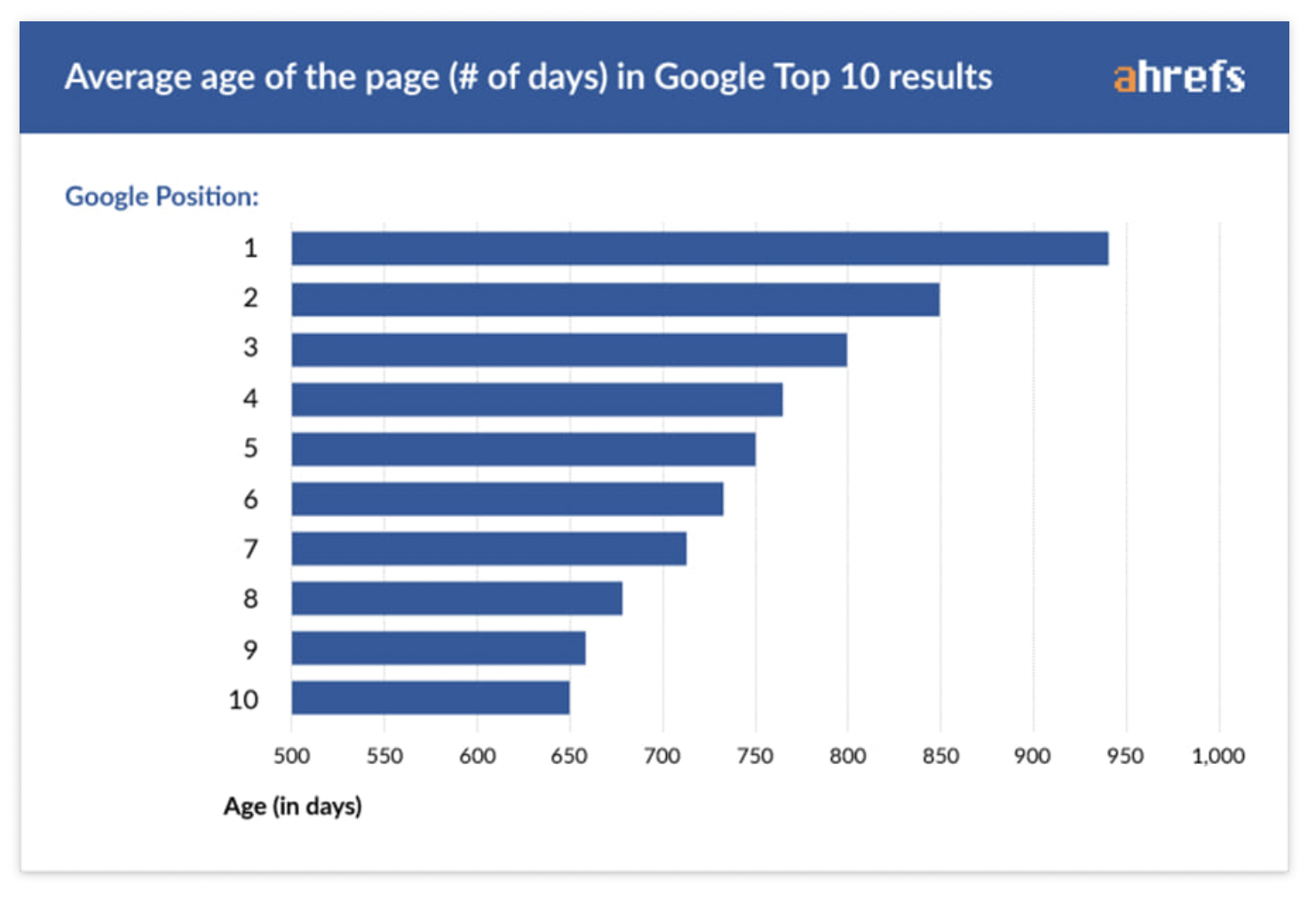

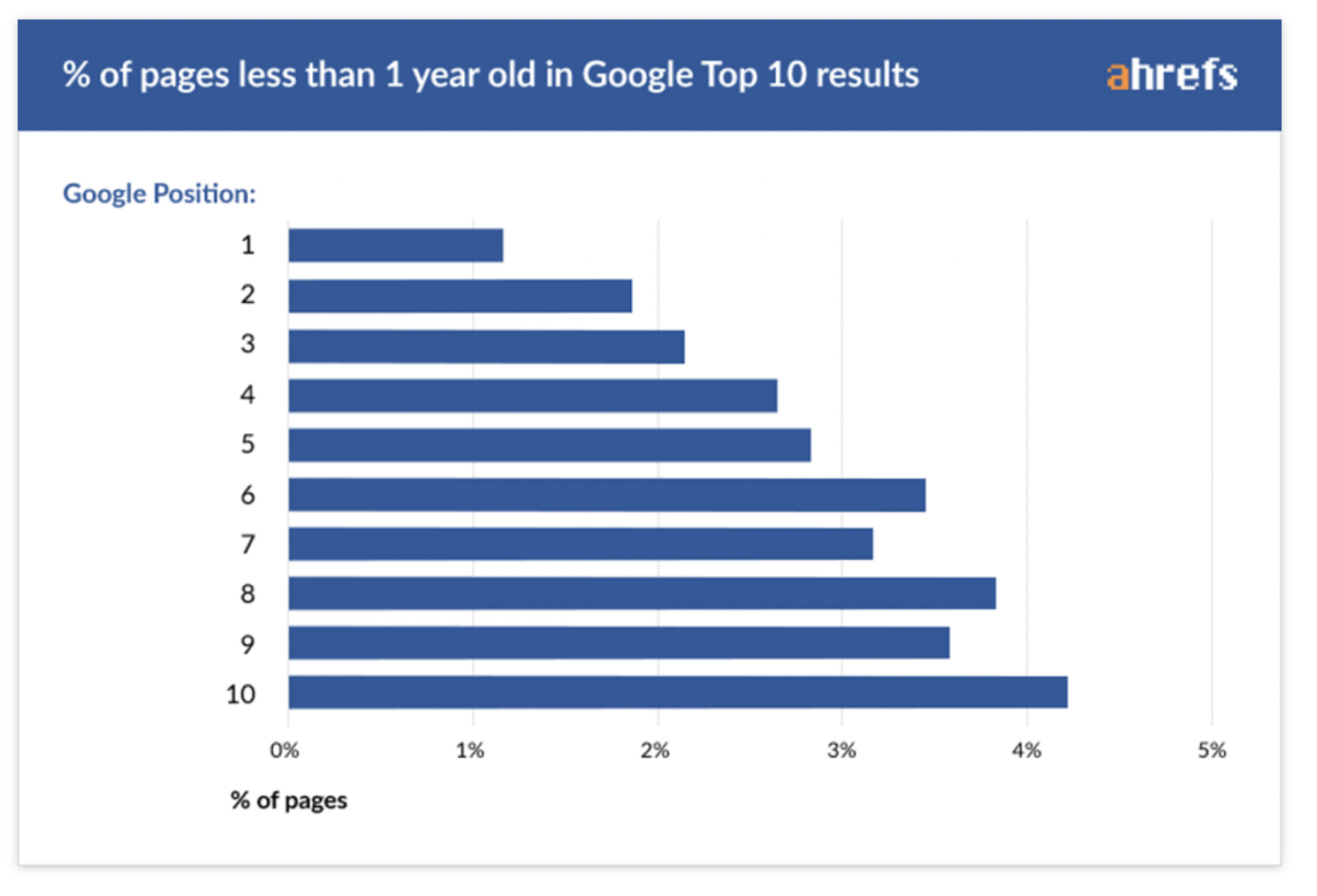

Domain age matters

You can’t control how long your site’s been around, but domain age can affect your score and your ranking. Older domains tend to rank better, so if your site is brand new, be patient: with effort, you’ll watch your DA rise over time.

Authority matters for SERP.

And authority comes from great content (on-page SEO) and the weight given to your site by outside sources (off-page SEO).

Authority also comes from the reputation of the site’s creator, which Google sums up as E-A-T.

What’s E-A-T?

E-A-T stands for expertise, authority, and trustworthiness.

Google tries to understand what your content says and who says it—especially for YMYL (your money or your life) sites.

YMYL sites display results that affect your wealth (financial websites) or your health (medical websites).

Google wants to get YMYL results right, and E-A-T is a major indicator:

Expertise

Is the site’s author qualified to speak about the topic? Do they have certified skills?

Authority

Does this source best answer a searcher’s question, or does someone else answer it better?

Trustworthiness

Does honesty prevail, and are the answers unbiased?

You can increase the E-A-T of your site with:

- an about page and a contact page

- links to certificates and credentials

- guest posts (which create high-quality backlinks)

- responses to reviews (including the negative ones)

2. Security

HTTPS is where it’s at.

In 2014, Google announced that HTTPS would become a ranking signal. It’s a lightweight signal on its own, but it’s table stakes for any site that wants to look trustworthy.

How do you get that special “s” at the end of the Hypertext Transfer Protocol (http) at the beginning of your website URL? You encrypt your site by asking your web host for an SSL certificate.

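A Secure Sockets Layer (SSL) certificate makes sure the information exchanged between users and your site is protected by privacy, authentication, and integrity. (Modern sites actually use SSL’s successor, TLS, but the certificate is still called an SSL certificate.)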

In plain speak:

Privacy

Data is encrypted, so any hacker who tries to intercept data will see a jumbled bag of characters that’s nearly impossible to decrypt.

Authentication

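When a user surfs pages of your site, their browser and the server where your site is hosted perform a handshake. Your server presents its certificate, and the browser checks that a trusted certificate authority issued it for your domain. That authentication makes sure visitors are talking to the real site, not an impostor.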

Integrity

Authentication proves who’s on the other end of the connection. Integrity makes sure the data itself reaches the recipient without being altered or tampered with during transmission.
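To see why tampering gets caught, here’s a toy Python illustration of the idea behind integrity checks: the sender attaches a tag computed from the data and a shared secret key, and the receiver recomputes it. This is not TLS itself (modern TLS uses authenticated encryption such as AES-GCM), and the random key is only a stand-in for the keys a real handshake would negotiate.

```python
import hashlib
import hmac
import secrets

# Stand-in for the shared key both sides would derive during a real handshake.
session_key = secrets.token_bytes(32)


def tag(message: bytes) -> bytes:
    """Compute an authentication tag that travels alongside the message."""
    return hmac.new(session_key, message, hashlib.sha256).digest()


def verify(message: bytes, received_tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(tag(message), received_tag)


original = b"Total: $49.00"
sent_tag = tag(original)

print(verify(original, sent_tag))            # True: untouched data passes
print(verify(b"Total: $4900.00", sent_tag))  # False: altered data fails
```

An attacker who changes the data can’t produce a matching tag without the key, which is why altered data is rejected instead of silently accepted.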

Search engines want to provide the best result to searchers, and that means sending them to trustworthy sites.

If your site begins with http:// and not https://, it isn’t secure. Chrome flags it as “Not secure” in the address bar, and Google won’t give it the HTTPS ranking boost.

Setting up an SSL certificate wherever your site is hosted often takes just a few minutes, and many hosts offer certificates for free (often through Let’s Encrypt). There is no excuse not to have one, so get one.

3. XML Sitemaps

Bots can’t crawl your site if they can’t find it.

A sitemap helps them find it.

XML stands for eXtensible Markup Language. An XML sitemap is a special document that lists the pages on your website you want crawled, giving Googlebot an overview of your content and how it’s organized.

Google loves seeing your site architecture: where every piece of content lives and how the pieces relate to each other.

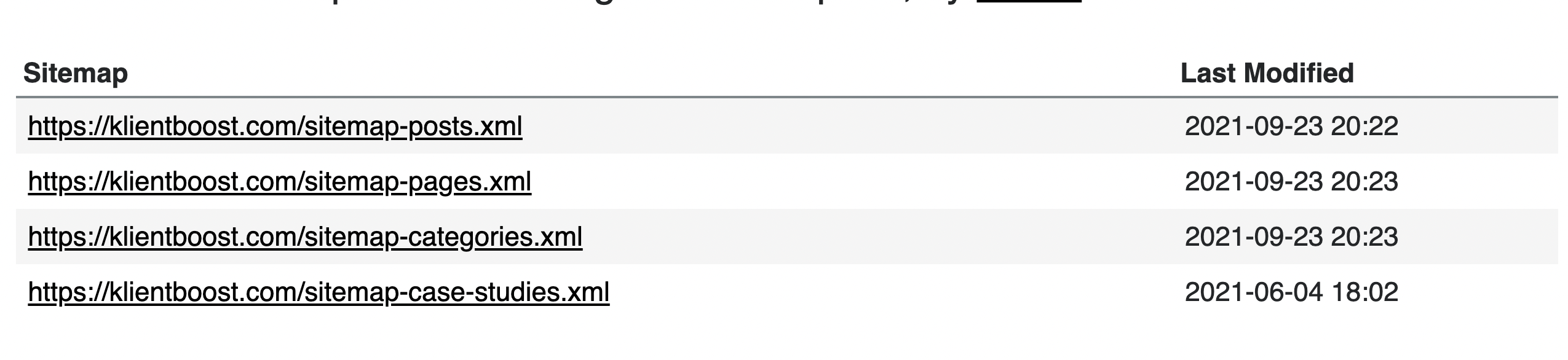

Do you already have one? Look for it here:

yourdomain.com/sitemap.xml or yourdomain.com/sitemap_index.xml

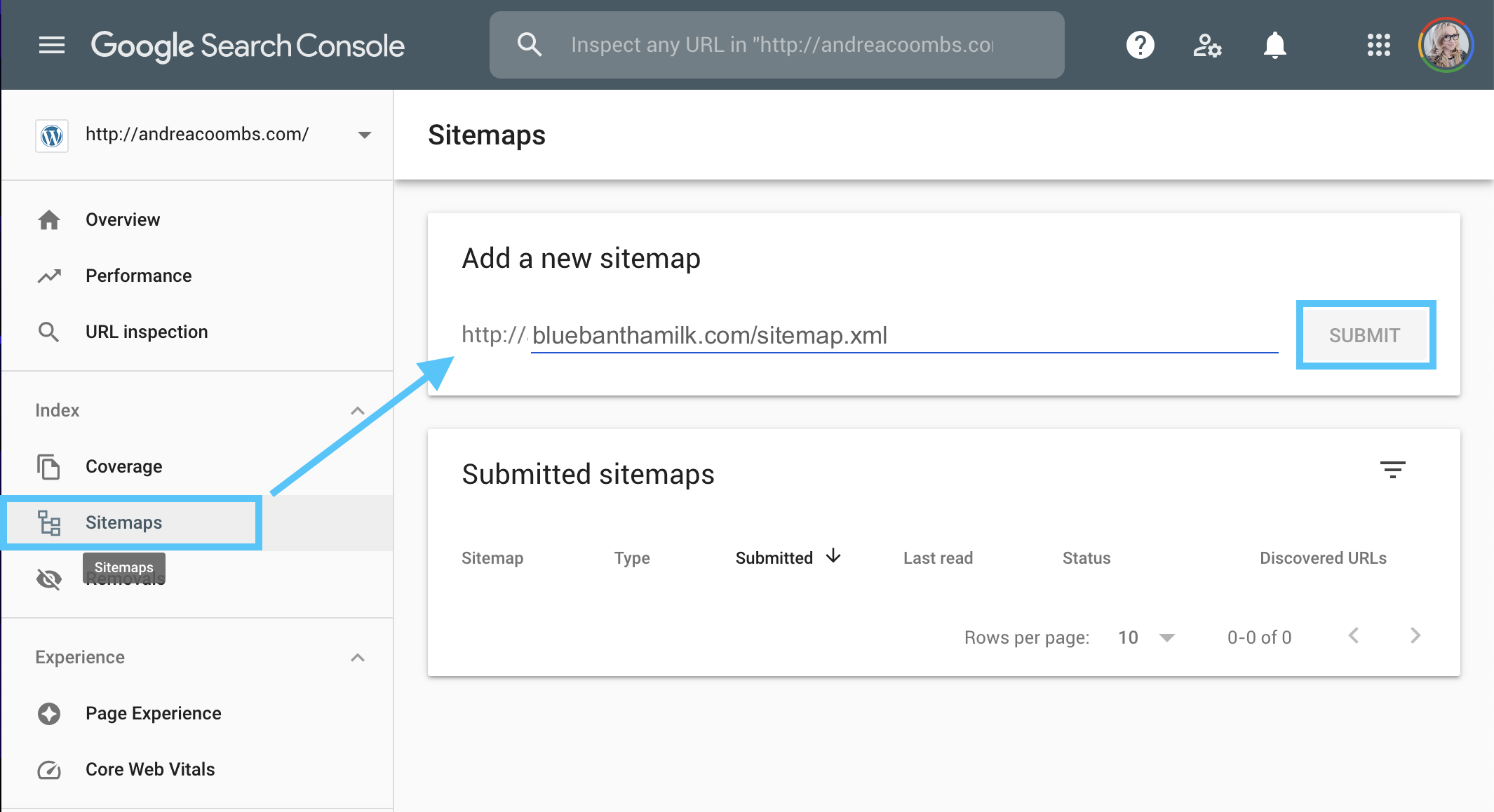

If you don’t, generate one with a sitemap plugin like Yoast and submit it to Google Search Console (GSC).
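If you’d rather see what a sitemap generator produces (or you’re building one for a custom site), here’s a minimal Python sketch using the standard library’s `xml.etree.ElementTree`. It follows the sitemaps.org protocol: a `<urlset>` root with one `<url>` per page, each holding a `<loc>` and an optional `<lastmod>`. The `build_sitemap` helper and the example.com URLs and dates are hypothetical; list only the pages you want to show up in search results.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, str]) -> str:
    """Return sitemap XML for a {page_url: last_modified_date} mapping."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages.items():
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # full, absolute URL
        ET.SubElement(entry, "lastmod").text = lastmod  # YYYY-MM-DD is valid W3C Datetime
    # tostring escapes characters like "&" in URLs, which the protocol requires.
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap({
    "https://www.example.com/": "2024-01-15",
    "https://www.example.com/blog/seo-vs-sem/": "2024-01-10",
}))
```

Save the output as sitemap.xml at your site’s root, then submit it in Google Search Console.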

But what if there are pages on your site that you don’t want to include in the sitemap because you don’t want them to appear in Google search results?

You can handle that with your robots.txt file and robots meta tags (and by simply leaving those pages out of your sitemap).

4. Robots.txt

Don’t want Google to see a page or crawl it for links? Tell the robots that with your robots.txt file.

Your robots.txt file lives at the root of your site and tells crawlers which URLs they may crawl. For granular, page-by-page control over whether pages appear in Google search results, use the robots meta tag, which lives in the <head> of each page.

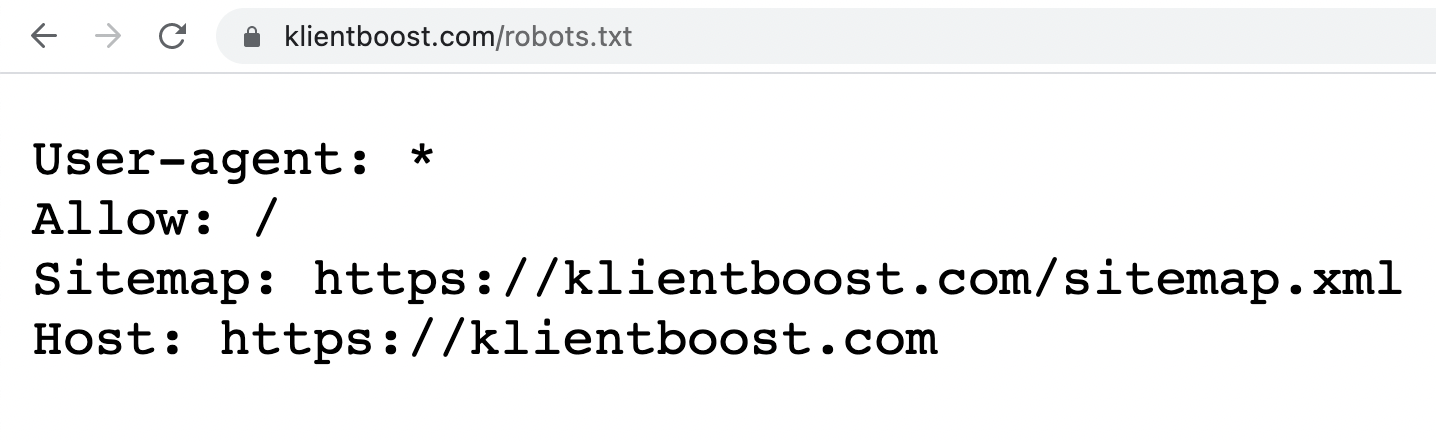

Type this into a browser: www.yoursite.com/robots.txt

Do you see something like this?

Sites default to allow unless you specify otherwise.

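To stop Google from indexing one of your pages, place a robots meta tag in the <head> section of that one webpage. Don’t also block that page in robots.txt: if Googlebot can’t crawl the page, it never sees the tag.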

Start by adding a noindex tag.

Noindex means “don’t show this page in search results.”

```html
<!DOCTYPE html>
<html>
  <head>
    <meta name="robots" content="noindex"/>
  </head>
</html>
```

Also add a nofollow directive.

Nofollow means “don’t follow the links on this page either.” This one’s important because, without it, Google uses the links on the page to discover other linked pages.

Make it easy on yourself by combining multiple directives in one tag, separated by commas.

This robots meta tag prevents robots from indexing the page or following any of the links on it:

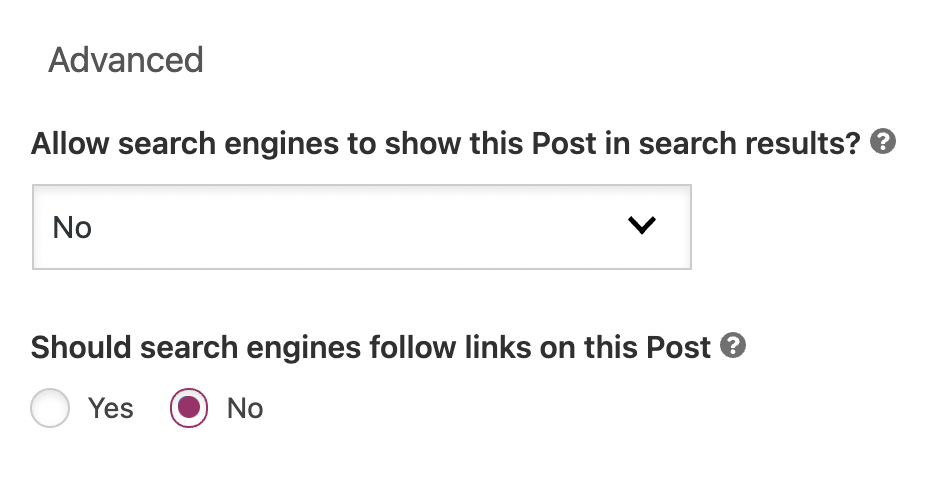
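To double-check which directives a page actually sends, you can read its robots meta tags programmatically. Here’s a minimal Python sketch with the standard library’s `html.parser`; `robots_directives` is a hypothetical helper, and it checks both the generic `robots` name and the Google-specific `googlebot` name.

```python
from __future__ import annotations

from html.parser import HTMLParser


class RobotsMetaReader(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in {"robots", "googlebot"}:
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow".
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set[str]:
    """Return every robots directive declared in the page's meta tags."""
    reader = RobotsMetaReader()
    reader.feed(html)
    return reader.directives


page = '<head><meta name="robots" content="noindex, nofollow"/></head>'
print(sorted(robots_directives(page)))  # ['nofollow', 'noindex']
```

An empty result means the page sends no robots meta directives, so Google falls back to its defaults (index the page, follow its links).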

Make it even easier on yourself by skipping the raw coding and using the Yoast plugin’s advanced feature:

There’s a lot you can do with robots.txt, but for our high-level overview, we’ll cover one more useful command: disallow.

If you don’t want the Googlebot to crawl a single web page (maybe you have a page where you test out new things?), disallow it like this:

```text
User-agent: *
Disallow: /tester_file.html
```
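You can test a Disallow rule before you publish it. Python’s standard library ships `urllib.robotparser`, which reads robots.txt rules and answers whether a given crawler may fetch a URL. This is a minimal sketch, assuming the two-line rule set for /tester_file.html and a hypothetical www.yoursite.com domain and blog path. The parser handles basic prefix matching but not every extension Google supports (such as `*` and `$` wildcards inside paths), so treat it as a sanity check, not a Googlebot simulator.

```python
from urllib.robotparser import RobotFileParser

# The same rules as the robots.txt example; keep them on consecutive lines,
# because a blank line after "User-agent" ends that group for this parser.
robots_txt = """\
User-agent: *
Disallow: /tester_file.html
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

for path in ("/tester_file.html", "/blog/seo-vs-sem/"):
    url = "https://www.yoursite.com" + path
    print(path, "crawlable:", parser.can_fetch("Googlebot", url))
# /tester_file.html crawlable: False
# /blog/seo-vs-sem/ crawlable: True
```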

5. Schema Markup

Whoa, now.

Schema’s getting deep into technical SEO.

Schema markup is structured data—the kind of data Google admires because it tells the bots what your site is about.

Structuring your pages with schema markup identifies the type of content on that page.

Think of schema markup as a title and description under your page that Googlebot understands. It’s machine-readable data (written as JSON-LD, Microdata, or RDFa) that improves robot readability.

Add schema markup to enhance how your page displays on the search engine results page (SERP) with rich snippets.

There are 792 types of schema, but we talk about the eleven most important schemas here. You can also check out a beginner’s guide to schema at Semrush.
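Google recommends JSON-LD as the format for structured data where possible. Here’s a minimal Python sketch that builds schema.org `Article` markup for a blog post (say, an article about SEO vs. SEM); the `article_jsonld` helper, author name, image URL, and date are hypothetical placeholders.

```python
import json


def article_jsonld(headline: str, author: str, image_url: str, published: str) -> str:
    """Wrap schema.org Article data in a JSON-LD <script> tag (hypothetical helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "image": image_url,
        "datePublished": published,  # ISO 8601 date, e.g. "2021-06-01"
    }
    # Escape "</" so text like "</script>" inside a value can't close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(article_jsonld(
    headline="SEO vs. SEM",
    author="Jane Doe",
    image_url="https://www.example.com/images/seo-vs-sem.png",
    published="2021-06-01",
))
```

Paste the printed `<script>` tag into the page’s HTML; Google reads JSON-LD in either the `<head>` or the `<body>`.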

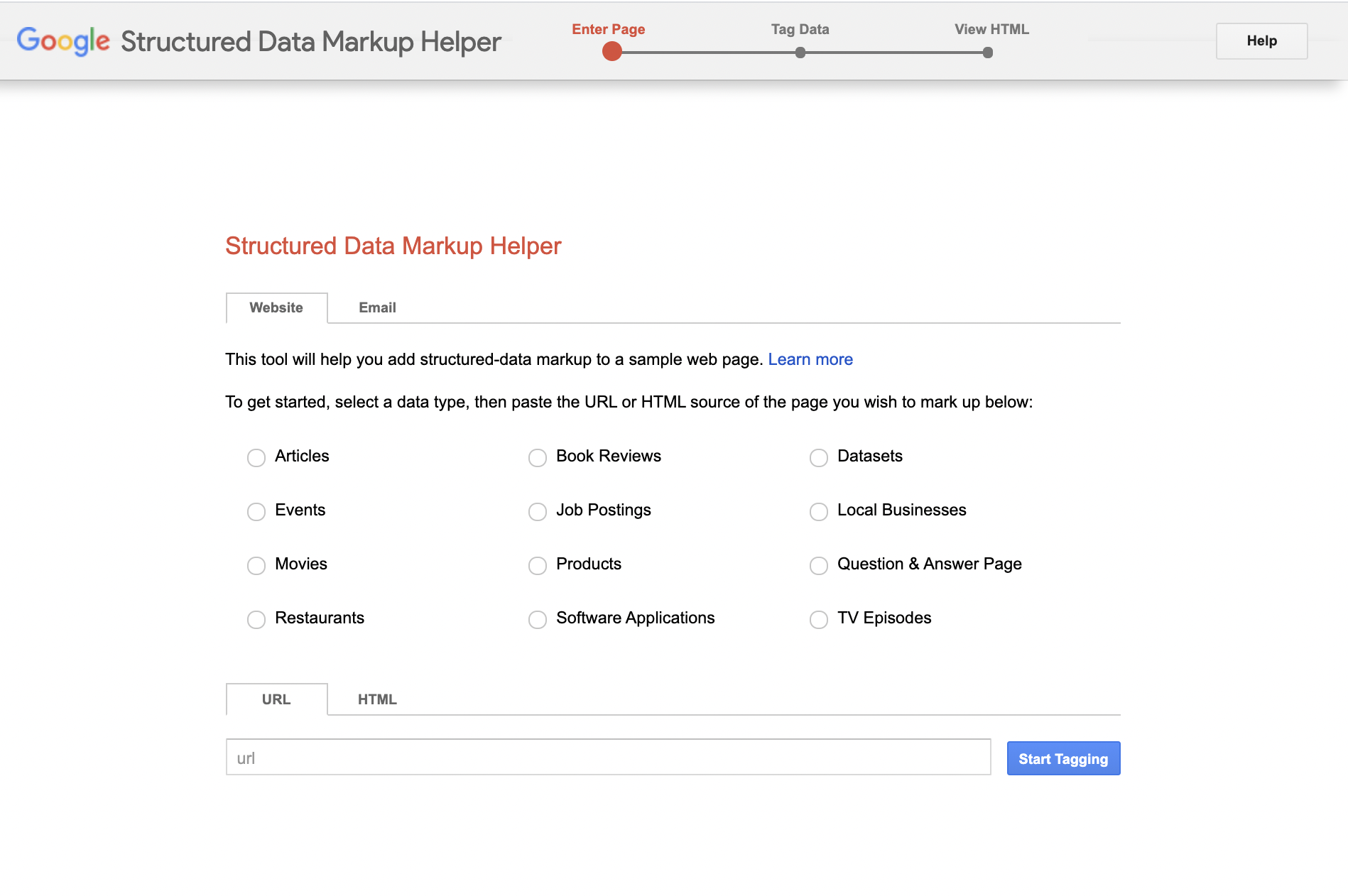

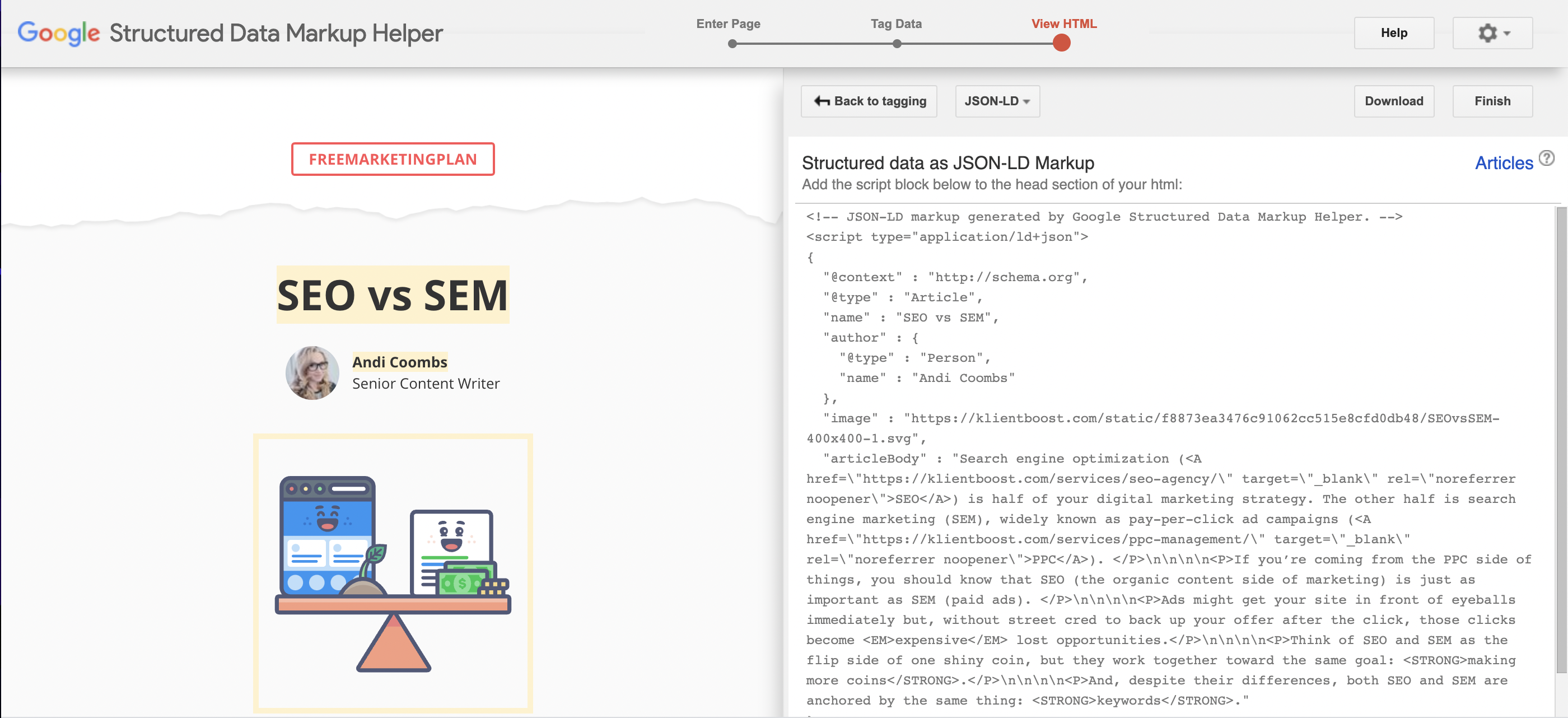

To make things simple, Google gives you a free tool to help structure your data: the Structured Data Markup Helper.

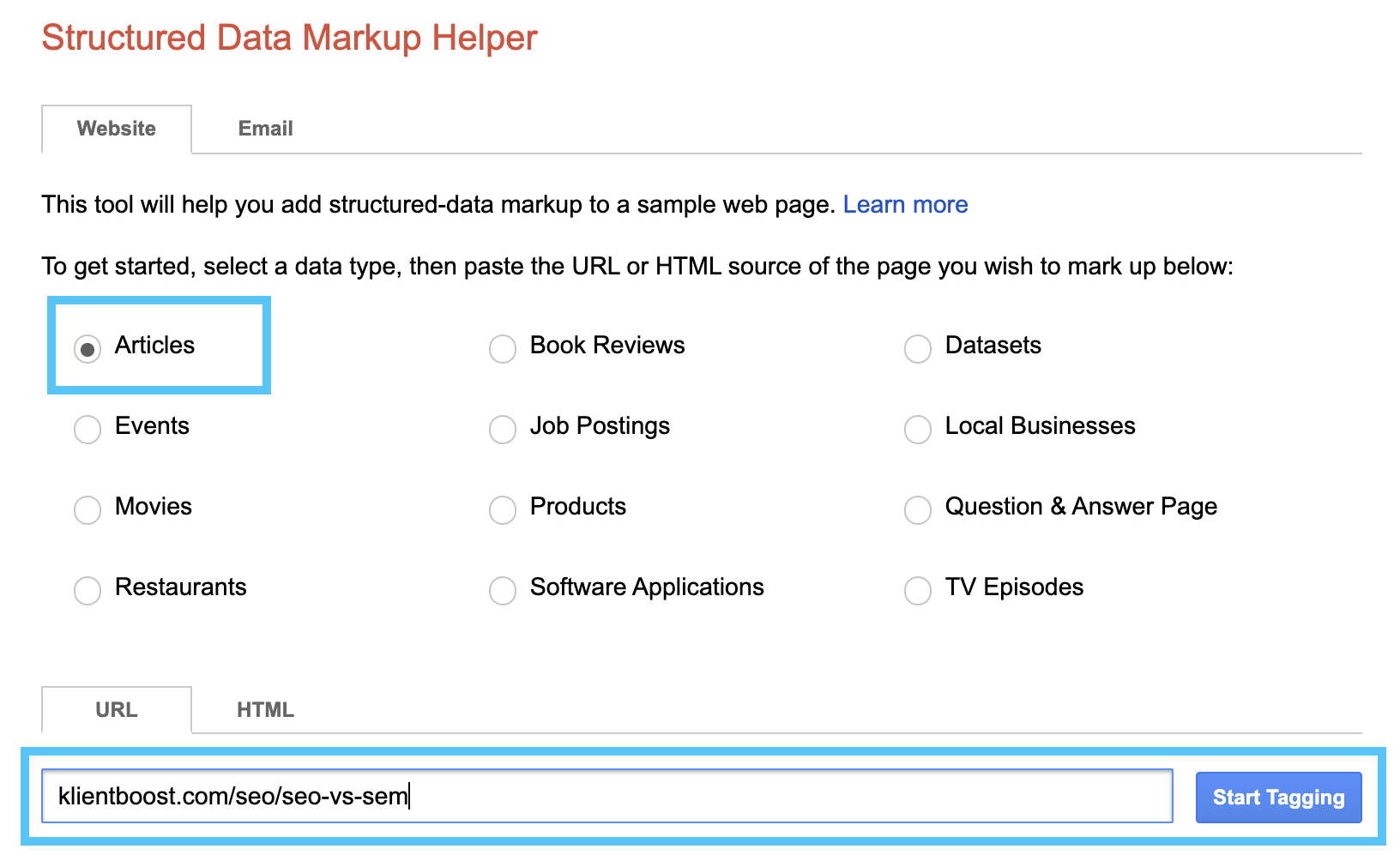

Step one: Select your page data type, enter your URL, and click the Start Tagging button. In this example, we’ll tag a KlientBoost blog article about SEO vs. SEM.

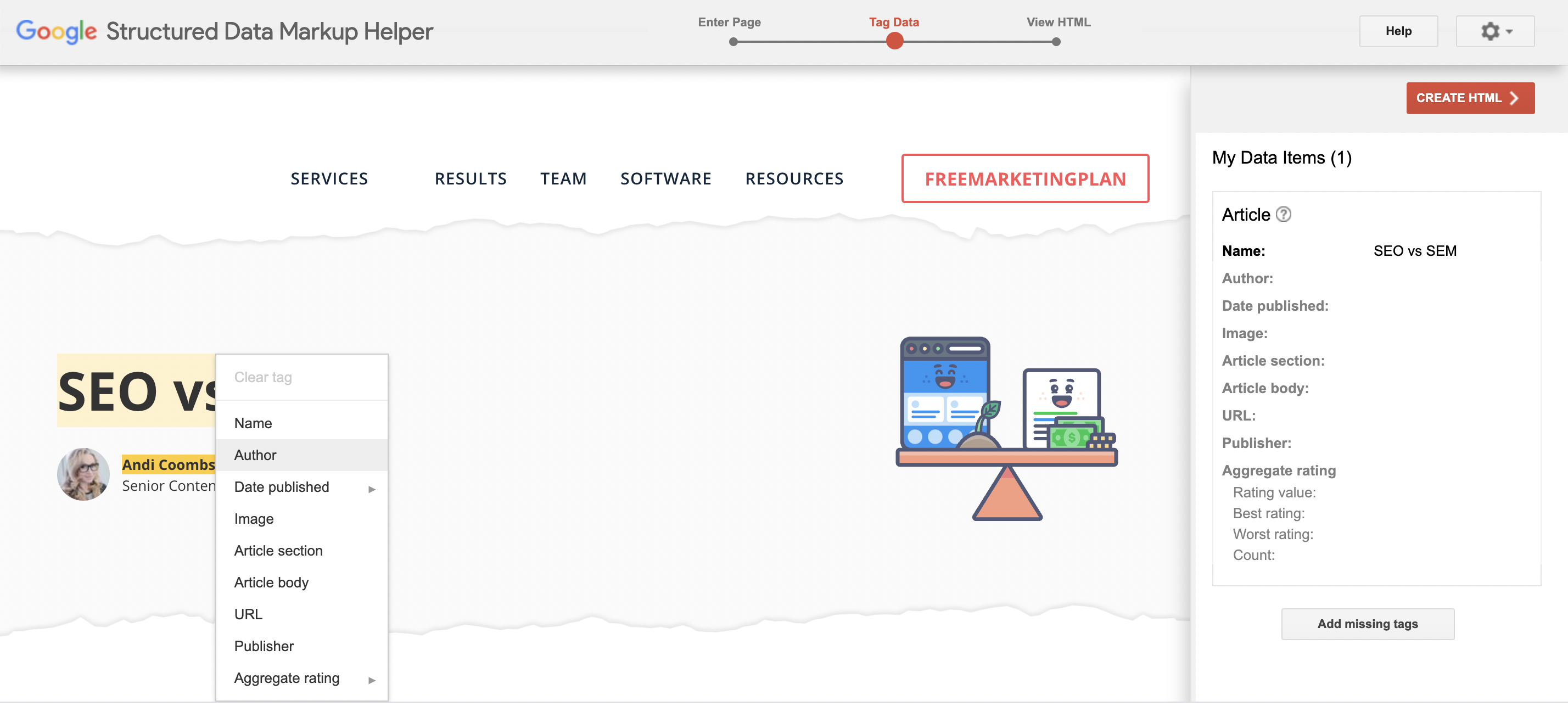

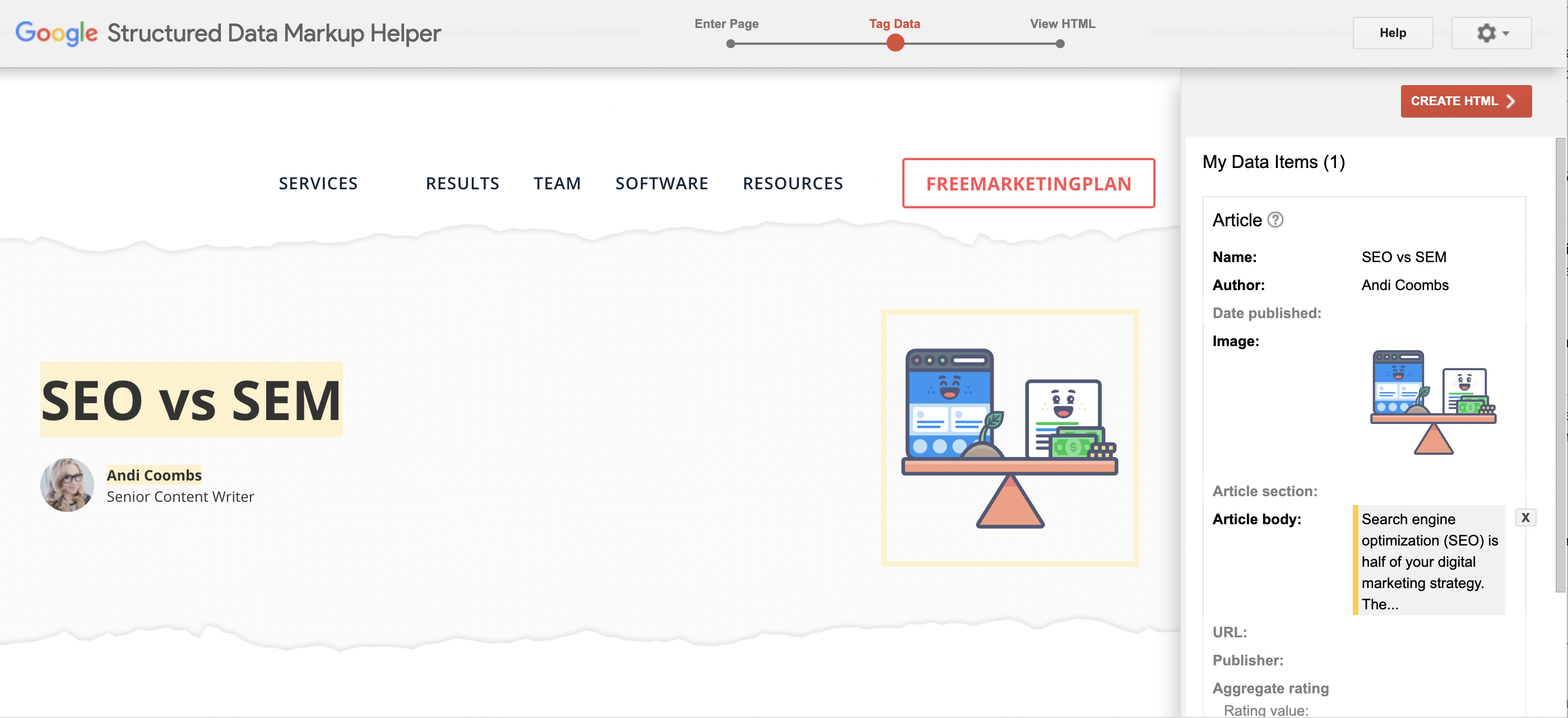

Step two: Highlight the elements of the page (like Name, Author, and Image) and tag them. Keep highlighting elements and selecting how each one should be tagged.

You can find all of the schema properties here to manually add to the Markup Helper if you don’t see the tag you want in the list.

Step three: Click the Create HTML button to generate the HTML code to add to that web page.

Step four: Download the code and add it to your web page.

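When you’re done creating schema tags and you’ve uploaded the code to your web page, check that you added your structured data correctly with Google’s Rich Results Test. (The older Structured Data Testing Tool has been retired; for general schema checks, use the Schema Markup Validator at schema.org.)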

At this point, you’ll feel like a search engine ranking factor schema markup mofo badass.

But does your page pass the mobile test?

6. Mobile-first

Your site on a phone is more important than your site on a laptop or desktop. Search engines evaluate your page based on how it renders on mobile. And if it renders looking like crap, your rank takes a hit (even if the desktop version looks amazing).

Preview the mobile version in your CMS (WordPress, Shopify, HubSpot, etc.) or use a tool like Google’s Mobile-friendly Test to see how easy it is for visitors to engage with your page on their phone.

If your page isn’t mobile-friendly, switch to a responsive theme and follow the tool’s suggestions to fix your mobile-friendliness.

How to improve your search engine ranking on Google

Optimize your site for the search engine ranking factors listed above, and then wait for Google to pay you ranking homage when and where it’s due.

The magic might not happen right away, but the more relevant you make your site for your audience, the more link love you’ll get in the process, and the more authority you’ll gain in the eyes of the crawlers that award the prizes.

Make your website secure, trustworthy, organized, mobile-friendly, and free of errors to get to that top-ranking spot sooner rather than later.

And when you make new content, make sure it’s so good it deserves a backlink and optimize that page so it’s easy to crawl and index.

We talk more about new content optimization in Chapter 2 of our SEO hub about on-page SEO.